I am reposting the rules from my old blog at SDN here, because I think they give a more compact description of the memory usage of Java objects.

The general rules for computing the size of an object on the SUN/SAP VM are:

32 bit

Arrays of boolean, byte, char, short, int: 2 * 4 (Object header) + 4 (length-field) + sizeof(primitiveType) * length -> align result up to a multiple of 8

Arrays of objects: 2 * 4 (Object header) + 4 (length-field) + 4 * length -> align result up to a multiple of 8

Arrays of longs and doubles: 2 * 4 (Object header) + 4 (length-field) + 4 (dead space due to alignment restrictions) + 8 * length

java.lang.Object: 2 * 4 (Object header)

other objects: sizeofSuperClass + 8 * nrOfLongAndDoubleFields + 4 * nrOfIntFloatAndObjectFields + 2 * nrOfShortAndCharFields + 1 * nrOfByteAndBooleanFields -> align result up to a multiple of 8

64 bit

Arrays of boolean, byte, char, short, int: 2 * 8 (Object header) + 4 (length-field) + sizeof(primitiveType) * length -> align result up to a multiple of 8

Arrays of objects: 2 * 8 (Object header) + 4 (length-field) + 4 (dead space due to alignment restrictions) + 8 * length

Arrays of longs and doubles: 2 * 8 (Object header) + 4 (length-field) + 4 (dead space due to alignment restrictions) + 8 * length

java.lang.Object: 2 * 8 (Object header)

other objects: sizeofSuperClass + 8 * nrOfLongDoubleAndObjectFields + 4 * nrOfIntAndFloatFields + 2 * nrOfShortAndCharFields + 1 * nrOfByteAndBooleanFields -> align result up to a multiple of 8
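The rules above can be written down as a few lines of Java. This is only a sketch of the arithmetic, not a measurement tool: the class and method names (`ShallowSizeRules`, `arraySize`, `referenceArraySize`, `instanceSize`) are made up for illustration, and the numbers are only as good as the rules themselves for the VM in question.

```java
/** Sketch of the shallow-size rules above. All names are hypothetical. */
final class ShallowSizeRules {

    /** Rounds a size up to the next multiple of 8. */
    static long alignTo8(long size) {
        return (size + 7) & ~7L;
    }

    /** Object header: 2 * 4 bytes on 32 bit, 2 * 8 bytes on 64 bit. */
    static long header(boolean is64Bit) {
        return is64Bit ? 16 : 8;
    }

    /** Array whose elements take elementSize bytes each (1, 2, 4 or 8). */
    static long arraySize(boolean is64Bit, int elementSize, long length) {
        long size = header(is64Bit) + 4;      // header + length field
        if (elementSize == 8) {
            size += 4;                        // dead space due to alignment restrictions
        }
        return alignTo8(size + elementSize * length);
    }

    /** Array of object references: 4 bytes each on 32 bit, 8 bytes each on 64 bit. */
    static long referenceArraySize(boolean is64Bit, long length) {
        return arraySize(is64Bit, is64Bit ? 8 : 4, length);
    }

    /** Instance size, starting from the (already aligned) size of the superclass. */
    static long instanceSize(boolean is64Bit, long superClassSize,
                             int longsAndDoubles, int intsAndFloats, int references,
                             int shortsAndChars, int bytesAndBooleans) {
        long referenceSize = is64Bit ? 8 : 4;
        long size = superClassSize
                + 8L * longsAndDoubles
                + 4L * intsAndFloats
                + referenceSize * references
                + 2L * shortsAndChars
                + bytesAndBooleans;
        return alignTo8(size);
    }

    public static void main(String[] args) {
        System.out.println("byte[10] on 32 bit:  " + arraySize(false, 1, 10));
        System.out.println("Object[5] on 64 bit: " + referenceArraySize(true, 5));
        // A class extending java.lang.Object with one int and one reference field.
        System.out.println("int + ref on 64 bit: " + instanceSize(true, header(true), 0, 1, 1, 0, 0));
    }
}
```

For a class that extends java.lang.Object directly, pass `header(is64Bit)` as the superclass size; for deeper hierarchies, feed the result for the superclass into the call for the subclass.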

Note that an object might have unused space due to alignment at every inheritance level (e.g. imagine a class A with just a byte field, and a class B that has A as its superclass and declares a byte field itself -> 14 bytes 'wasted' on a 64 bit system).
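To see where the 14 bytes come from, here is the A/B example spelled out step by step. It is a sketch that just applies the 64 bit rules from this post; the class name `InheritancePadding` and its methods are invented for the illustration.

```java
/** Worked example for the 64 bit A/B case above. Names are hypothetical. */
final class InheritancePadding {

    /** Rounds a size up to the next multiple of 8. */
    static long alignTo8(long size) {
        return (size + 7) & ~7L;
    }

    /** class A { byte a; } : 16 byte header + 1 byte field, then aligned. */
    static long sizeOfA() {
        return alignTo8(16 + 1);
    }

    /** class B extends A { byte b; } : aligned size of A + 1 byte field, then aligned again. */
    static long sizeOfB() {
        return alignTo8(sizeOfA() + 1);
    }

    /** Bytes of B that carry neither header nor field data. */
    static long wastedInB() {
        long payload = 16 + 1 + 1;   // header + the two byte fields
        return sizeOfB() - payload;
    }

    public static void main(String[] args) {
        System.out.println("A: " + sizeOfA() + " bytes");
        System.out.println("B: " + sizeOfB() + " bytes, wasted: " + wastedInB());
    }
}
```

A is padded from 17 to 24 bytes and B from 25 to 32 bytes, so 7 bytes are lost at each of the two levels.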

In practice, a 64 bit VM needs 30 to 50% more memory, because references are twice as large.

[UPDATE]

How much memory does a boolean consume?

Coming back to the question of how much memory a boolean consumes: yes, it does consume at least one byte, but due to alignment rules it may consume much more. IMHO it is more interesting to know that a boolean[] consumes one byte per entry and not one bit, plus some overhead due to alignment and for the length field of the array. There are graph algorithms where large fields of bits are useful, and you need to be aware that if you use a boolean[], you need almost exactly 8 times more memory than really needed (1 byte versus 1 bit).
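The factor of 8 can be checked with the array rules from the 64 bit section. The sketch below compares a boolean[] with the same number of bits packed into a long[]; the long[] packing is my assumption for the comparison, and the class name `BooleanArrayVersusBits` is made up.

```java
/** Compares boolean[] with bits packed into a long[], using the 64 bit rules above. */
final class BooleanArrayVersusBits {

    /** Rounds a size up to the next multiple of 8. */
    static long alignTo8(long size) {
        return (size + 7) & ~7L;
    }

    /** boolean[]: 16 byte header + 4 byte length field + 1 byte per entry, aligned. */
    static long booleanArrayBytes(long entries) {
        return alignTo8(16 + 4 + entries);
    }

    /** long[] holding 64 entries per element: header + length field + 4 dead bytes + 8 per long. */
    static long packedBitsBytes(long entries) {
        long words = (entries + 63) / 64;
        return 16 + 4 + 4 + 8 * words;
    }

    public static void main(String[] args) {
        long entries = 1_000_000;
        long asBooleans = booleanArrayBytes(entries);
        long asBits = packedBitsBytes(entries);
        System.out.println("boolean[]: " + asBooleans + " bytes");
        System.out.println("packed:    " + asBits + " bytes");
        System.out.println("ratio:     " + (double) asBooleans / asBits);
    }
}
```

For a million entries this gives 1,000,024 bytes versus 125,024 bytes, so the fixed overhead hardly matters and the ratio stays just below 8.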

Alternatives to boolean[]

To store large sets of booleans more efficiently, a standard BitSet can be used. It packs the bits into a compact representation that uses only one bit per entry (plus some fixed overhead).

For the Eclipse Memory Analyzer we needed large fields of booleans (millions of entries), and we know in advance how many entries we need.
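A minimal usage example with `java.util.BitSet` from the JDK might look like this. The helper `markEveryThird` and the class name are only there to have something to run.

```java
import java.util.BitSet;

/** Small usage example for java.util.BitSet. The helper method is hypothetical. */
final class BitSetExample {

    /** Returns a BitSet in which every third index below entries is set. */
    static BitSet markEveryThird(int entries) {
        BitSet marked = new BitSet(entries);   // initial capacity hint; the set can still grow
        for (int i = 0; i < entries; i += 3) {
            marked.set(i);
        }
        return marked;
    }

    public static void main(String[] args) {
        BitSet marked = markEveryThird(1_000_000);
        System.out.println("bit 3 set: " + marked.get(3));
        System.out.println("bit 4 set: " + marked.get(4));
        System.out.println("bits set:  " + marked.cardinality());
    }
}
```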

We therefore implemented the class BitField that is faster than BitSet.
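To give an idea of what such a class can look like, here is a minimal fixed-size bit field. It is a sketch and not the actual source of the Memory Analyzer's BitField: the name `SimpleBitField`, the int[] backing array and the method set are my assumptions. Because the size is fixed at construction time, it needs no growth logic.

```java
/** Minimal fixed-size bit field. A sketch, not the Memory Analyzer's BitField. */
final class SimpleBitField {

    private final int[] bits;   // 32 entries per int

    /** Creates a field with room for size entries, all initially false. */
    SimpleBitField(int size) {
        bits = new int[(size + 31) >>> 5];
    }

    /** Sets the entry at index to true. */
    void set(int index) {
        bits[index >>> 5] |= 1 << (index & 31);
    }

    /** Sets the entry at index to false. */
    void clear(int index) {
        bits[index >>> 5] &= ~(1 << (index & 31));
    }

    /** Returns the entry at index. */
    boolean get(int index) {
        return (bits[index >>> 5] & (1 << (index & 31))) != 0;
    }

    public static void main(String[] args) {
        SimpleBitField field = new SimpleBitField(1_000_000);
        field.set(999_999);
        System.out.println(field.get(999_999));
        field.clear(999_999);
        System.out.println(field.get(999_999));
    }
}
```

Note that `set` and `clear` are read-modify-write operations on a word shared by 32 entries, so two threads updating neighbouring entries at the same time can overwrite each other's changes.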

Still, we only use this class on single core machines, because its disadvantage is that it is not thread safe. On multicore machines we use boolean[], and I will explain in a later post how that works, because it's pretty tricky.

Note that these rules tell you only the flat (=shallow) size of an object, which is in practice pretty useless.

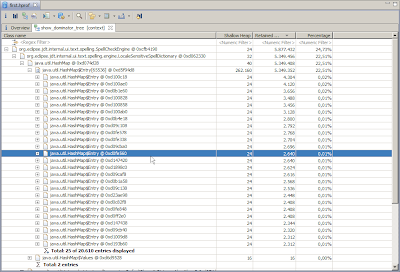

In my next post I will explain how you can better measure how much memory your Java objects consume.