Today I will show you how easy this kind of analysis is with the Eclipse Memory Analyzer.

I first started Eclipse 3.4 M7 (running on JDK 1.6_10) with a single project, "winstone", which contains the source of the Winstone project (version 0.9.10):

Then I took a heap dump using the JDK 1.6 jmap command:

Since this was a relatively small dump (around 45 Mbyte), the Memory Analyzer parsed and loaded it in a couple of seconds:

On the "Overview" page I already found one suspect. The spellchecker (marked in red in the screenshot) takes 5.6 Mbyte (24.6%) out of 22.7 Mbyte overall memory consumption!

That's certainly too much for a "non core" feature.

[update:] In the meantime I submitted a bug (https://bugs.eclipse.org/bugs/show_bug.cgi?id=233156)

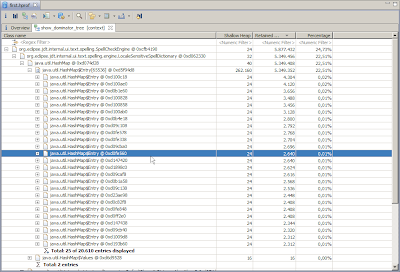

Looking at the spellchecker in the dominator tree reveals that the implementation of the dictionary used by the spellchecker is rather simplistic.

No trie, no Bloom filter, just a simple HashMap mapping from a String to a List of spell-checked Strings:

There's certainly room for improvement here by using one of the advanced data structures mentioned above.
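To illustrate what such a data structure could look like, here is a minimal trie sketch. It is not the Eclipse spellchecker's code; the class names `WordTrie` and `WordTrieDemo` are made up for this example. Words that share a prefix share the nodes for that prefix. A memory-conscious implementation would likely replace the per-node HashMap with compact arrays, so treat this only as a sketch of the idea.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical minimal trie: words with a common prefix share nodes. */
final class WordTrie {
    private static final class Node {
        // One child per next character; a compact array would save memory.
        final Map<Character, Node> children = new HashMap<>();
        boolean endOfWord;
    }

    private final Node root = new Node();
    private int size;

    /** Adds a word; returns true if it was not already present. */
    boolean add(String word) {
        Node node = root;
        for (int i = 0; i < word.length(); i++) {
            node = node.children.computeIfAbsent(word.charAt(i), c -> new Node());
        }
        if (node.endOfWord) {
            return false;
        }
        node.endOfWord = true;
        size++;
        return true;
    }

    /** Returns true only for complete words, not for mere prefixes. */
    boolean contains(String word) {
        Node node = root;
        for (int i = 0; i < word.length() && node != null; i++) {
            node = node.children.get(word.charAt(i));
        }
        return node != null && node.endOfWord;
    }

    int size() {
        return size;
    }
}

final class WordTrieDemo {
    public static void main(String[] args) {
        WordTrie dictionary = new WordTrie();
        dictionary.add("spell");
        dictionary.add("spelling");
        System.out.println(dictionary.contains("spelling"));
        System.out.println(dictionary.contains("spel"));
    }
}
```

A spell checker would call `contains` for every word in the editor and only look for suggestions when the lookup fails.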

My favorite memory consumption analysis trick

Now comes my favorite trick, which almost always works to find some memory to optimize in a complex Java application.

I went to the histogram and checked how much memory the String instances retain:

12 Mbyte (out of 22.7), quite a lot! Note that 4 Mbyte of that comes from the spellchecker above (how I computed that is not shown here), but that still leaves 8 Mbyte for Strings.

The next step was to call the "magic" "group by value" query on all those Strings:

This showed us how many duplicates of those Strings there are:

Duplicates of Strings everywhere

What does this table tell us? It tells us, for example, that there are 1988 duplicates of the String "id" and 504 duplicates of the String "true". Yes, I'm serious. Before you laugh and comment on how silly this is, I recommend that you take a look at your own Java application :] In my experience over the past few years, this is one of the most common memory consumption problems in complex Java applications.
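The idea behind "group by value" can be sketched in plain Java. This is not how the Memory Analyzer implements the query; it only mimics the result on an ordinary collection, and the names `DuplicateStrings` and `groupByValue` are invented for the example. It assumes every element of the input is a separate String instance.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper that mimics a "group by value" report for Strings. */
final class DuplicateStrings {
    /**
     * Groups the given String instances by their value and returns only the
     * values that occur more than once, most frequent first.
     */
    static Map<String, Long> groupByValue(Collection<String> instances) {
        Map<String, Long> counts = instances.stream()
                .collect(Collectors.groupingBy(s -> s, Collectors.counting()));
        return counts.entrySet().stream()
                .filter(e -> e.getValue() > 1)
                .sorted(Map.Entry.<String, Long>comparingByValue().reversed())
                .collect(Collectors.toMap(
                        Map.Entry::getKey,
                        Map.Entry::getValue,
                        (a, b) -> a,
                        LinkedHashMap::new));
    }

    public static void main(String[] args) {
        List<String> heap = new ArrayList<>();
        // new String(...) forces separate instances with equal values.
        for (int i = 0; i < 3; i++) {
            heap.add(new String("id"));
        }
        for (int i = 0; i < 2; i++) {
            heap.add(new String("true"));
        }
        heap.add(new String("winstone"));
        System.out.println(groupByValue(heap));
    }
}
```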

"id" or "name", for example, are clearly constant unique identifiers (UIDs). There's simply no reason why you would want that many duplicates of UIDs. I don't even have to check the source code to claim that.

Let's check which instances of which class are responsible for these Strings.

I called the immediate dominator function on the top 30 dominated Strings :

org.eclipse.core.internal.registry.ConfigurationElement seems to cause most of the duplicates: 13,242!

If you look at the instances of ConfigurationElement, it's pretty clear that there's a systematic problem in this class. So this should be easy to fix, for example by using String.intern() or a Map to avoid the duplicates.
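Both options can be sketched in a few lines. The `StringPool` class below is a hypothetical helper, not code from ConfigurationElement or any Eclipse class; it assumes that the owning code would pass every attribute name or value through `canonical` before storing it. Note that such a pool keeps strong references to its entries, so it fits best for a bounded set of identifiers.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical Map-based pool that returns one shared instance per value. */
final class StringPool {
    private final ConcurrentMap<String, String> pool = new ConcurrentHashMap<>();

    /** Returns the pooled instance equal to s, adding s if none exists yet. */
    String canonical(String s) {
        if (s == null) {
            return null;
        }
        String existing = pool.putIfAbsent(s, s);
        return existing != null ? existing : s;
    }

    int size() {
        return pool.size();
    }
}

final class StringPoolDemo {
    public static void main(String[] args) {
        // Two separate instances with the same value, as a parser might create.
        String a = new String("id");
        String b = new String("id");
        System.out.println("distinct instances: " + (a != b));

        StringPool pool = new StringPool();
        System.out.println("same after pooling: "
                + (pool.canonical(a) == pool.canonical(b)));

        // String.intern() gives the same guarantee via the JVM-wide pool.
        System.out.println("same after intern(): " + (a.intern() == b.intern()));
    }
}
```

A private Map keeps the pooled Strings scoped to the component that owns them, while String.intern() shares them across the whole JVM; which one fits better depends on how long the identifiers need to live.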

Bashing Eclipse?

Now you may think that this guy is bashing Eclipse, but that's really not the case.

If you beg enough, I might also take a closer look at Netbeans :]

15 comments:

Interesting, did you file a bug against platform/text regarding the spell checker?

See also bug https://bugs.eclipse.org/227986

Hi Benno,

I have not filed any bugs yet, because I only finished the blog post last night (or should I say this morning).

Anyway, I plan to file a bug.

Regarding the other bug you referenced: I think I can easily help you out with this if we get an hprof heap dump that somehow reproduces the problem.

Regards,

Markus

Interesting article that would be great to have on the EclipseZone site. Would you be interested in reposting this? If so, please contact me at james at dzone dot com and we can organise it.

James

Excellent article. I did not know it was so easy to analyze a heap dump these days. Really awesome article, keep'em coming!

I beg you to try NetBeans 6.1 too. Download full package and start it. Can you please send me an email then?

Thanks for the comment on my blog. I'm surprised you actually found it ;) The MAT tool solved, in a couple of hours, what had been a memory leak in our application for over 2 years! I guess it was known that we had a memory leak; it's just that we didn't have enough traffic back then to cause a performance degradation.

Aside from the sleek UI and blazing speed of MAT, the feature we found most useful is the Path to GC Roots. Performing this exact functionality using jhat never worked for us. With the IBM analyzer tool on alphaWorks, we couldn't even get the tool to open our heap dump file.

Thank you for the tool!

btw, the cause of our issue was that our jsp Tag classes (those that extend javax.servlet.jsp.tagext.TagSupport) weren't being released by the TagHandlerPool. The issue here is that our Tag classes didn't have any cleanup code in the doEndTag() and release() methods - now our task is to determine where to set references to null (either in doEndTag or release() ). Thank you thank you

Dear Markus,

I just started reading your entire blog, and I have to say that it is a wonderful blog. It seems that I will spend a lot of time here today. This is not only a blog about memory consumption and its influence on Java-based applications, but also a very useful blog for people who love software engineering. I especially liked the dictionary algorithms.

Keep up this great blog. Thanks for sharing.

@Cemo,

Thanks for the flowers ;)

Markus, so there are 1988 duplicates of the String "id", but how much physical memory do they take? Wouldn't it make sense to take the overall data sizes into account?

Eugene,

Sure, the overall memory usage of all the duplicates would be interesting. It's a little bit complicated to compute how much could be saved.

Nevertheless, it's a systematic problem and there's no advantage to implementing it this way.

It's not only id, but also name, class, true(!) and a lot more of the Strings in the top list, which are obviously unique identifiers.

Note also that comparing those Strings gets slower (during a search in a HashMap, for example), because String.equals checks == first, which will always be false if the Strings are duplicated.

The fixes are most likely very simple, because only local changes in a few classes are necessary.

The duplicated Strings might also point to duplicates of whole object instances. I haven't checked this.

Sorry, I might have deleted a "real" comment, when deleting spam.
