This time I will take a look at Netbeans (6.1, the Java SE pack) using the exact same setup.

First, the overall memory consumption of Netbeans, at 24 Mbyte, is only a little higher than the 22.7 Mbyte of Eclipse.

Keep in mind that the Eclipse memory usage includes the spell checker, which needs 5.6 Mbyte and can easily be turned off. Without the spell checker Eclipse would need only 17.1 Mbyte.

The overview of the Memory Analyzer shows that the biggest memory consumer, at around 5.4 Mbyte, is sun.awt.image.BufImgSurfaceData:

Swing overhead(?)

This seems to be caused by the fact that Swing uses Java 2D, which does its own image buffering independent of the OS. I could easily figure this out by using the Memory Analyzer's "path to GC roots" query:

So maybe we pay here for the platform independence of Swing?

I quickly checked with Google whether there are ways around this image buffering, but I couldn't find any clear guidance on how to avoid it. If there are Swing experts reading this, please let me know your advice.
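To get a feeling for the order of magnitude, here is a minimal sketch that estimates how much memory one off-screen image of window size holds. It rests on assumptions that are not part of the measurement: the 1280x1024 size is made up, the class name `BackBufferEstimate` is hypothetical, and the heap dump does not confirm that the retained BufImgSurfaceData instances belong to a buffer like this.

```java
import java.awt.image.BufferedImage;

class BackBufferEstimate {
    // Bytes held by the pixel array of an image that stores one 32-bit int
    // per pixel (valid for the TYPE_INT_* image types only).
    static long pixelBytes(BufferedImage image) {
        return (long) image.getRaster().getDataBuffer().getSize() * Integer.BYTES;
    }

    public static void main(String[] args) {
        // Assumed window size, not a value taken from the heap dump.
        BufferedImage buffer = new BufferedImage(1280, 1024, BufferedImage.TYPE_INT_RGB);
        System.out.println(pixelBytes(buffer) + " bytes");
    }
}
```

With four bytes per pixel, a single buffer of that size already comes to about 5 Mbyte, so a figure in the range seen above would not be surprising for one full-window image.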

Duplicated Strings again

Again I checked for duplicated Strings using the "group_by_value" feature of the Memory Analyzer.

Again, some Strings occur many times:

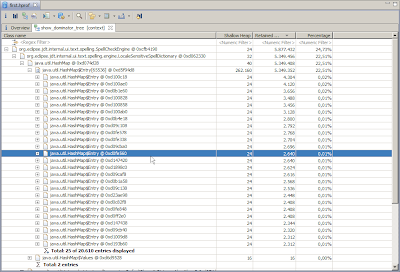
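Conceptually, grouping by value just counts how many distinct String instances carry the same content. The following sketch illustrates that idea in plain Java. It is not how the Memory Analyzer implements the feature, and the class and method names are made up for the example.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class DuplicateStringCount {
    // Counts how many times each String value occurs among the given instances.
    static Map<String, Integer> groupByValue(List<String> strings) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String s : strings) {
            counts.merge(s, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // new String(...) forces distinct instances with equal content,
        // which is what duplicated Strings look like on the heap.
        List<String> instances = Arrays.asList(
                new String("class"), new String("class"),
                new String("java"), new String("class"));
        groupByValue(instances).forEach((value, count) -> {
            if (count > 1) {
                System.out.println(value + " x" + count); // prints "class x3"
            }
        });
    }
}
```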

This time I selected all Strings that occur more than once, then called the "immediate dominators" query, and afterwards used the "group by package" feature in the resulting view:

This view shows you the sum of the number of duplicates in each package and therefore gives you a good overview of which packages waste the most memory because their objects keep duplicates of Strings alive.

You can see, for example, that org.netbeans.modules.java.source.parsing.FileObjects$CachedZipFileObject keeps a lot of duplicated Strings alive.

Looking at some of these objects, you can see, for example, that one problem is the instance variable ext, which very often contains duplicates of the String "class".
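One common way to avoid this kind of duplication is to canonicalize the value when it is stored, for example with String.intern(). The sketch below uses a hypothetical `Entry` class as a stand-in. It is not the Netbeans code, and whether interning would be the right fix there is an assumption that I have not checked.

```java
class ExtInterningSketch {
    // Hypothetical stand-in for an object holding a file extension.
    static final class Entry {
        final String ext;

        Entry(String ext) {
            // intern() returns the canonical instance from the JVM string
            // pool, so equal extensions share one String object.
            this.ext = ext.intern();
        }
    }

    // substring() creates a fresh String on every call, which is one way
    // many equal "class" instances can end up on the heap.
    static String extensionOf(String fileName) {
        return fileName.substring(fileName.lastIndexOf('.') + 1);
    }

    public static void main(String[] args) {
        Entry a = new Entry(extensionOf("Foo.class"));
        Entry b = new Entry(extensionOf("Bar.class"));
        System.out.println(a.ext == b.ext); // true: both refer to the same instance
    }
}
```

Without the call to intern(), the two entries would each hold their own copy of "class", which is the pattern the dominator view points at.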

Summary

So in the end we found that, for this probably simplistic scenario, neither Netbeans nor Eclipse consumes that much memory. 24 Mbyte is really not that much these days.

Eclipse seems to have a certain advantage, because turning off the spell checker is easy, and then it needs almost 30% less memory than Netbeans.
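The "almost 30%" follows directly from the numbers measured above. As a small worked check, with a made-up class name, it can be written as:

```java
class MemoryComparison {
    // Fraction by which `other` is smaller than `baseline`.
    static double relativeSaving(double baseline, double other) {
        return (baseline - other) / baseline;
    }

    public static void main(String[] args) {
        double netbeans = 24.0;     // Mbyte, measured above
        double eclipse = 22.7;      // Mbyte, including the spell checker
        double spellChecker = 5.6;  // Mbyte
        double eclipseWithout = eclipse - spellChecker; // about 17.1 Mbyte
        System.out.printf("%.1f Mbyte, %.1f%% less than Netbeans%n",
                eclipseWithout, 100 * relativeSaving(netbeans, eclipseWithout));
    }
}
```

(24 - 17.1) / 24 is 0.2875, so the saving is just under 29%.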

To be clear, it is still much too early to declare a real winner here. My scenario is just too simple.

The only conclusion that we can draw for sure right now is that this kind of analysis is pretty easy with the Eclipse Memory Analyzer :)